What to Do if You Have to Request a Lot of Websites in Python

Last year, I started freelancing as a web scraper using the requests and beautifulsoup modules. Later, on a few projects, I ran into a strange issue while scraping a website.

That website was using some JavaScript code, and I was unable to solve the JavaScript rendering problem with the Python requests module.

This made me wonder, and I started researching to see if there is a Python library that can help me solve the JavaScript rendering problem. It turns out that we have the requests-html library for exactly this problem.

In this article I will explain the easiest ways of web scraping using the Python requests-html library.

Web Scraping

Web scraping is extracting the required information from a webpage. For me, it was a good source of income when I started freelancing with Python.

If you know Python basics, then learning web scraping will be no less than fun for you. You can do many interesting things by scraping websites in Python.

Python is a good choice if you ever think of web scraping. Python offers different libraries to scrape websites, and requests-html is a good example of one.

What is requests-html?

requests-html is a Python library for scraping websites. It can help you scrape any type of website, including dynamic websites. requests-html supports JavaScript rendering, and this is the reason it is different from other Python libraries used for web scraping.

For me, the Python requests-html module is the best library for web scraping. Once you have learned requests-html, scraping websites will be a piece of cake for you. You will understand why by the end of this requests-html tutorial.

JavaScript rendering

When a developer uses JavaScript to manipulate Document Object Model (DOM) elements, it is called JavaScript rendering. In simple words, JavaScript rendering means using JavaScript to produce output in the browser.

Example of JavaScript Rendering

    <!DOCTYPE html>
    <html lang="en">
    <head>
      <title>Document</title>
    </head>
    <body>
      <!-- The h1 element will be created in the body using JavaScript -->
      <script type="text/javascript">
        var h1_tag = document.createElement("h1");
        h1_tag.innerHTML = "H1 Generated with JavaScript";
        var body_tag = document.getElementsByTagName('body')[0];
        body_tag.appendChild(h1_tag);
      </script>
    </body>
    </html>

Why should you use requests-html?

requests-html solves the JavaScript rendering problem, and this is the reason you should use the requests-html library in Python. requests, beautifulsoup, and Scrapy are also used for web scraping, but requests-html is the easiest way to scrape a website among all of them.

Features of Python requests-html library

- Async Support

- JavaScript support

- Cookie persistence

- Parsing abilities

- Support for multiple selectors

You can also use the requests-html Python library to parse HTML files without making a request. JavaScript rendering is likewise supported for local files.
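As a rough illustration of parsing without a request, the sketch below feeds an HTML string to the `HTML` class from `requests_html` and reads the first heading. The `SAMPLE_PAGE` markup and the `first_heading_text` helper are made up for this example; in practice the string could come from a file you opened yourself.

```python
# A small HTML document held in a string (made up for this example).
SAMPLE_PAGE = """
<html>
  <body>
    <h1>Hello from a local file</h1>
    <p class="intro">No HTTP request was made to get this text.</p>
  </body>
</html>
"""


def first_heading_text(markup):
    """Return the text of the first <h1> in the given HTML string."""
    # Imported here so the helper can be defined before the library
    # is installed; calling it requires `pip install requests-html`.
    from requests_html import HTML

    page = HTML(html=markup)
    # first=True asks find() for a single element instead of a list.
    heading = page.find('h1', first=True)
    return heading.text if heading is not None else None
```

Calling `first_heading_text(SAMPLE_PAGE)` should give back the heading text. Rendering JavaScript for a local document is left out of this sketch because it needs a headless browser.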

How to use the requests-html library?

When you are scraping websites with the Python requests-html library, follow these steps to extract the information.

Step 1: Find the target element on the web page.

Step 2: Inspect the target element that you want to extract.

Step 3: Use the proper selector (ID, class name, XPath).

Step 4: Get the target element using the requests-html library.
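Steps 3 and 4 can be sketched in a few lines. The helper names `css_selector` and `grab_elements` are hypothetical and only serve this illustration; steps 1 and 2 happen in the browser, so they have no code here.

```python
def css_selector(kind, value):
    """Turn a selector kind ('id', 'class', 'tag') and a value into a CSS selector."""
    if kind == 'id':
        return f'#{value}'
    if kind == 'class':
        return f'.{value}'
    if kind == 'tag':
        return value
    raise ValueError(f'unsupported selector kind: {kind}')


def grab_elements(url, kind, value):
    """Build the selector, fetch the page, and return the matching elements."""
    # Imported lazily; needs `pip install requests-html` and network access.
    from requests_html import HTMLSession

    session = HTMLSession()
    response = session.get(url)
    return response.html.find(css_selector(kind, value))
```

For instance, `grab_elements('https://webscraper.io/', 'id', 'navbar')` would look for the element with id `navbar`, assuming the page still has one.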

Install requests-html library in Python

Before doing anything else, we need to install the requests-html library. requests-html is not a built-in module but can be easily installed. Depending on your system, you should follow different approaches to install requests-html.

Install requests-html using pip

pip is probably the easiest way to install a Python package. You can use pip to install the requests-html library.

Copy the command and run it in the terminal to install the latest version of the requests-html library:

    python -m pip install requests-html

Copy the command and run it in the terminal to install a specific version of requests-html:

    python -m pip install requests-html==0.10.0

If you want to upgrade an already installed requests-html library, run the following command in the terminal:

    python -m pip install --upgrade requests-html

Install requests-html using conda

To install the latest version of requests-html using conda, enter the following command and run it.

    conda install requests-html

Install requests-html in Jupyter

Jupyter is a good IDE for working on web-scraping projects. In Jupyter you can install requests-html using pip install requests-html.

    pip install requests-html

Install requests-html in Linux

If you are using the Linux operating system, first install pip, and then use pip to install the requests-html library.

    pip install requests-html

Inspecting elements on a Web Page

When scraping a targeted element from a web page, the first step is to find that specific element on the page. This process is known as inspecting elements. It is a 3-step process.

Follow these steps to inspect an element on a web page.

- Go to the specific webpage using the URL.

- Right-click on the target element that you want to extract.

- Click on Inspect, and it will open the inspection window.

Example of inspecting elements on a webpage:

Let's say we want to scrape this webpage [https://www.hepper.com/most-beautiful-dog-breeds/].

Step 1: Copy and paste the URL into your browser's address bar.

Step 2: Click on the target element.

Let's say you want to grab the first section. Just right-click on it.

Step 3: Click on the last option, Inspect, in the options menu.

After clicking on Inspect, you will see the inspection window open inside the tab. You can now get the HTML code of the element.

This is how we inspect the target elements. To better understand, use the [https://webscraper.io/] website for testing purposes.

Using different types of selectors in requests-html

The requests-html library supports all kinds of selectors. We can select an element using the tag name, id, class, or XPath. In this section, I will guide you on how to use different CSS selectors to grab an element.

Select element using id in requests-html

The best way to select an element is to use the id of that element. Using the id is the best option, as an id should appear only once on a webpage. The id is a unique selector.

To select an element using the id in requests-html, use the r.html.find('#id') method.

Example No 1: Select an element of a webpage using the id

For test purposes, use the https://webscraper.io webpage.

We will grab the navbar with id 'navbar' from this website using the id of the element.

    # importing the HTMLSession class
    from requests_html import HTMLSession

    # create the session object
    session = HTMLSession()

    # url of the page
    web_page = 'https://webscraper.io/'

    # making a get request to the webpage
    response = session.get(web_page)

    # getting the html of the page
    page_html = response.html

    # finding the element with id 'navbar'
    navbar = page_html.find('#navbar')

    # printing the element
    print(navbar)

The output of the code is a list containing the navbar element.

Select element using the class name in requests-html

Just like the id, we can find an element using the class name. A class can be assigned to more than one element, and this is the reason that finding an element by class name will return a list of elements. You can use the r.html.find('.className') function to find an element by class name in requests-html.

Example No 2: Select an element by using the class name in requests-html

In this example, we will grab the video on the home page of the [https://webscraper.io/] website. On inspecting the video, we find the class names "intro-video-wrapper" and "embedded-video"; the code below uses "embedded-video" to find the video URL.

    # importing the HTMLSession class
    from requests_html import HTMLSession

    # create the session object
    session = HTMLSession()

    # url of the page
    web_page = 'https://webscraper.io/'

    # making a get request to the webpage
    response = session.get(web_page)

    # getting the html of the page
    page_html = response.html

    # finding elements with class name 'embedded-video'
    video_frame = page_html.find('.embedded-video')

    # get all attributes of the first match
    video_attrs = video_frame[0].attrs

    # read the url from the 'src' attribute
    video_url = video_attrs['src']

    # printing the url
    print(video_url)

The output of the code is the URL of the YouTube video.

Select elements using tag name in requests-html

To find an element by its tag name with requests-html, use the r.html.find('tagName') function. It will return the list of all matching tags.

This is the most general case, where you want to find all similar tags. Let's say you want to get all the rows of a table, or maybe the list items of a list.

Example No 3: Select a specific tag with requests-html

In this case, we want to scrape all the paragraph tags from the [https://webscraper.io/] website.

    # importing the HTMLSession class
    from requests_html import HTMLSession

    # create the session object
    session = HTMLSession()

    # url of the page
    web_page = 'https://webscraper.io/'

    # making a get request to the webpage
    response = session.get(web_page)

    # getting the html of the page
    page_html = response.html

    # finding all the paragraphs
    all_paragraphs = page_html.find('p')

    # printing the list of paragraphs
    print(all_paragraphs)

The output of the code is a list of all paragraph elements.

Select element using CSS attribute in requests-html

Besides the id and the class name, we can use other CSS attributes to get the elements from the webpage. To scrape an element using CSS attributes, use the find('[CSS_Attribute="value"]') function. It will grab the specified elements from the webpage.

With requests and Beautiful Soup, you can achieve the same results, but you will have to take an extra step. This is the beauty of the requests-html library.

Example No 4: Select HTML elements using CSS attributes in the requests-html library

In this example, we will use the same website to grab the header. The header has an attribute 'role' and its value is 'banner'. So we will use requests-html to find the header using 'role' as a CSS selector.

    # importing the HTMLSession class
    from requests_html import HTMLSession

    # create the session object
    session = HTMLSession()

    # url of the page
    web_page = 'https://webscraper.io/'

    # making a get request to the webpage
    response = session.get(web_page)

    # getting the html of the page
    page_html = response.html

    # finding elements with the CSS attribute 'role'
    header = page_html.find('[role="banner"]')

    # printing the element
    print(header)

The output of the code is a list of the elements with the 'role=banner' attribute.

Select element using text in requests-html

The power of requests-html increases even more with this amazing feature of finding an element using text inside the element. To find an element based on certain text, you can use the r.html.find('selector', containing='text') function. This will return a list of all elements containing that particular text.

Example No 5: Find an element on a page based on text in requests-html

In this Python code example, we will find all the paragraphs containing the text 'web data extraction'.

    # importing the HTMLSession class
    from requests_html import HTMLSession

    # create the session object
    session = HTMLSession()

    # url of the page
    web_page = 'https://webscraper.io/'

    # making a get request to the webpage
    response = session.get(web_page)

    # getting the html of the page
    page_html = response.html

    # finding elements based on text
    p_tag_with_text = page_html.find('p', containing='web data extraction')

    # printing the element
    print(p_tag_with_text)

The output of the code is the list of paragraph tags containing the text 'web data extraction'.

Select element using XPath in requests-html

When you want to get an HTML element in the easiest way but the element has no id, worry not: we have the XPath option in requests-html, which makes it easy to find an element in a webpage.

XPath can be used to navigate through elements and attributes in an HTML document. If you do not know how to create an XPath to an element, give a read to this Microsoft article about XPath.

Example No 6: Find an element with XPath in the requests-html library

In this example, we use an XPath expression to get the specified elements with requests-html.

    # importing the HTMLSession class
    from requests_html import HTMLSession

    # create the session object
    session = HTMLSession()

    # url of the page
    web_page = 'https://webscraper.io/'

    # making a get request to the webpage
    response = session.get(web_page)

    # getting the html of the page
    page_html = response.html

    # finding all h2 elements nested inside a div using xpath
    h2_inside_divs = page_html.xpath('//div//h2')

    # printing the elements list
    print(h2_inside_divs)

The output of this code is the list of 'h2' elements that are nested inside a 'div'.

Get text from HTML element in requests-html

Most of the time, our target on the webpage is extracting text from different HTML tags. So I dedicated this section to explaining how to extract text from different HTML elements.

To get the text of any HTML element in Python, use the following steps:

- Step 1: Install the requests-html library

- Step 2: Create an HTML session

- Step 3: Make a get request using requests-html

- Step 4: Get all the HTML from the response

- Step 5: Use the find() function to find elements

- Step 6: Get the text from all the elements using the text attribute of the element.

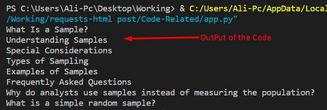

Extract the text of h2 tags in requests-html

The webpage is https://www.investopedia.com/terms/s/sample.asp

The target is to extract text from all <h2> tags.

The code to extract text from all h2 tags is the following:

    # importing the HTMLSession class
    from requests_html import HTMLSession

    # create the session object
    session = HTMLSession()

    # url of the page
    web_page = 'https://www.investopedia.com/terms/s/sample.asp'

    # making a get request to the webpage
    response = session.get(web_page)

    # getting the html of the page
    page_html = response.html

    # finding all h2 tags
    h2_tags = page_html.find('h2')

    # extracting text from all h2 tags
    for tag in h2_tags:
        print(tag.text)

The output of the above code is the text of all h2 tags.

Scrape the text of a Paragraph with requests-html

In this example, we will use the Python library requests-html to extract the text of a paragraph.

The website to scrape information from is [https://totalhealthmagazine.com/About-Us]

Our target is to get the plain text from the paragraphs using the requests-html library in Python.

The Python code to scrape text from all paragraphs using the requests-html library is the following.

    # importing the HTMLSession class
    from requests_html import HTMLSession

    # create the session object
    session = HTMLSession()

    # url of the page
    web_page = 'https://totalhealthmagazine.com/About-Us'

    # making a get request to the webpage
    response = session.get(web_page)

    # getting the html of the page
    page_html = response.html

    # finding all p tags
    p_tags = page_html.find('p')

    # extracting text from all p tags
    for tag in p_tags:
        print(tag.text)

The output of the above Python code is the text of all paragraphs present on that page.

Find the meta tags of a website using requests-html

Meta tags are the tags that hold information about the site. Meta tags are not used to show elements on the webpage, but they are very important for the website.

We can use the requests-html library to find all the meta tags of a webpage.

The following is the Python requests-html code that finds the meta tags of the website:

    # importing the HTMLSession class
    from requests_html import HTMLSession

    # create the session object
    session = HTMLSession()

    # url of the page
    web_page = 'https://totalhealthmagazine.com/About-Us'

    # making a get request to the webpage
    response = session.get(web_page)

    # getting the html of the page
    page_html = response.html

    # finding all meta tags
    meta_tags = page_html.find('meta')

    # printing the html of each meta tag
    for tag in meta_tags:
        print(tag.html)

The output of the above Python code is the list of all meta tags of the website.

Scrape all links from a website in Python with requests-html

To scrape all the anchor tags (<a> tags) from a website, requests-html gives us the simplest and best way.

Use the response.html.links property to get all the links from a webpage, or use response.html.absolute_links to extract the absolute links.

The following is Python code that extracts all the links from a website (https://www.trtworld.com/):

    # importing the HTMLSession class
    from requests_html import HTMLSession

    # create the session object
    session = HTMLSession()

    # url of the page
    web_page = 'https://www.trtworld.com/'

    # making a get request to the webpage
    response = session.get(web_page)

    # getting the html of the page
    page_html = response.html

    # collecting all links found on the page
    all_links = page_html.links

    # printing each link as it appears on the page
    for link in all_links:
        print(link)

    # getting only absolute links
    absolute_links = page_html.absolute_links
    for abs_link in absolute_links:
        print(abs_link)

The output of the above Python code is all the relative and absolute links available on that website.
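To make the difference between links as written on the page and absolute links concrete, here is a small standard-library sketch of how a relative link can be resolved against the page URL. It uses `urllib.parse.urljoin` as a stand-in and is not a description of how requests-html works internally; the example hrefs are invented.

```python
from urllib.parse import urljoin

PAGE_URL = 'https://www.trtworld.com/'


def to_absolute(page_url, href):
    """Resolve a link found on a page against that page's URL."""
    return urljoin(page_url, href)


# Invented hrefs, as they might appear in <a> tags on the page.
sample_hrefs = ['/news', 'https://example.com/story', 'about.html']
resolved = [to_absolute(PAGE_URL, href) for href in sample_hrefs]

for href, absolute in zip(sample_hrefs, resolved):
    print(f'{href} -> {absolute}')
```

A link that is already absolute passes through unchanged, while site-relative and page-relative links gain the scheme and host of the page they were found on.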

Find the title

Finding a page title is easy with requests-html. Of course, there are other ways around, but the best way to find the title of a webpage with Python is to use the find() function of the requests-html module.

Below is the Python code that finds the title of a webpage using the requests-html library.

    from requests_html import HTMLSession

    session = HTMLSession()
    web_page = 'https://edition.cnn.com/'
    response = session.get(web_page)
    page_html = response.html

    # find() returns a list, so take the first <title> element
    title = page_html.find('title')[0].text
    print(title)

The output of the above code is the title of the website.

What is HTML Session in the requests-html library?

In requests-html, a Session is a consumable session, for cookie persistence and connection pooling, amongst other things. It is a group of actions that can take place in a time frame.

Default HTML Session

In normal cases only one HTMLSession is active, and it interacts with only one webpage at a given time.

Async HTML Session

An AsyncHTMLSession runs its requests concurrently on an asyncio event loop, so multiple web pages can be scraped at the same time within a single program.

Example No 7: Scraping 3 webpages at the same time with an Async HTML session in requests-html

Three web pages are scraped at the same time. The order of the output is not predictable, because one web page might get scraped earlier than the others.

    from requests_html import AsyncHTMLSession

    asession = AsyncHTMLSession()

    async def get_cnn():
        r = await asession.get('https://edition.cnn.com/')
        title = r.html.find('title')[0].text
        print(title)

    async def get_google():
        r = await asession.get('https://google.com/')
        title = r.html.find('title')[0].text
        print(title)

    async def get_facebook():
        r = await asession.get('https://facebook.com/')
        title = r.html.find('title')[0].text
        print(title)

    # pass the coroutine functions themselves, not the results of calling them
    asession.run(get_google, get_facebook, get_cnn)

The output is the titles of these 3 webpages.
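Why the order of the output can differ from the order of the calls is easier to see without the network. The sketch below uses only `asyncio` from the standard library; `fake_get` is a made-up stand-in that sleeps instead of downloading a page, and the delays are arbitrary.

```python
import asyncio

finished_order = []  # names in the order the simulated downloads complete


async def fake_get(name, delay):
    """Stand-in for an awaitable page request; sleeps instead of using the network."""
    await asyncio.sleep(delay)
    finished_order.append(name)
    return f'title of {name}'


async def scrape_all():
    # gather() starts all three coroutines and waits for them together.
    return await asyncio.gather(
        fake_get('cnn', 0.15),
        fake_get('google', 0.05),
        fake_get('facebook', 0.10),
    )


titles = asyncio.run(scrape_all())
print(finished_order)  # completion order follows the delays
print(titles)          # results keep the order the coroutines were passed in
```

With real websites the delays are not under your control, which is why the printed titles in Example No 7 can appear in any order.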

JavaScript rendering in requests-html

The JavaScript rendering problem is solved with the requests-html library in Python. JavaScript support in requests-html makes it easy to scrape websites that use JavaScript for rendering HTML.

We can scrape elements that are generated by JavaScript and shown in the browser with the help of the requests-html library.

Example No 8: In this example, we will scrape [https://www.geeksforgeeks.org/]

    from requests_html import HTMLSession

    session = HTMLSession()
    res = session.get("https://www.geeksforgeeks.org/")

    # render() executes the page's JavaScript in a headless browser
    res.html.render(timeout=10000)
    print(res.html.text)

The output of the code is the text that is generated after the execution of the JavaScript code.

How Pagination works in the requests-html Library

You might have seen social network sites that use pagination to render elements on a webpage. You will see two or three posts on the current screen, but when you keep scrolling, it renders more posts. This is done with the help of pagination.

It is hard to scrape websites that use pagination with other Python libraries. The requests-html Python library is the best option in this scenario to scrape a page with pagination.

Example No 9: In this example, we will scrape URLs from the dev.to website.

We could probably use Facebook, Twitter, or other social networking sites, but they need you to authenticate yourself, which would require us to take an extra step.

    from requests_html import HTMLSession

    session = HTMLSession()
    response = session.get('https://dev.to/')

    # iterating over the HTML object follows the "next page" links
    for html in response.html:
        print(html)

The output of this code should be the URLs of the posts available on the home page of the website, and it should keep on scrolling. But the output is not what we expected: the pagination feature is not currently working. As they say, it is continuously improving. I included this section because it may start working in the future. Hope for the best.

Quiz Solver Python Program

Let's say you have a webpage and you are given questions to solve using that webpage. Instead of looking through the webpage, you can use requests-html to answer your quiz questions. This is a fun program you can show your friends.

Let's say I want to answer questions from this webpage: [https://www.geeksforgeeks.org/string-data-structure/?ref=shm]. This webpage is all about strings in Python.

In the following example, we have used Python as the programming language to answer questions from that particular webpage.

    from requests_html import HTMLSession

    session = HTMLSession()
    res = session.get("https://www.geeksforgeeks.org/string-data-structure/?ref=shm")

    # {} marks the blank to be filled in from the page text
    answer = res.html.search('Strings are defined as an {} of characters.')[0]
    print(answer)

The output of the code is the answer to the blank space.
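The idea behind the `{}` blank can be imitated with a regular expression. The sketch below is only a conceptual analogue written with the standard `re` module, not the library's own implementation; `search_template` and the `page_text` string are invented for the illustration.

```python
import re


def search_template(template, text):
    """Find the values that fill each {} placeholder of `template` inside `text`."""
    # Escape the literal pieces and put a non-greedy capture group between them.
    literal_parts = [re.escape(part) for part in template.split('{}')]
    pattern = '(.+?)'.join(literal_parts)
    match = re.search(pattern, text)
    return match.groups() if match else None


# Invented page text standing in for the downloaded article.
page_text = 'Introduction. Strings are defined as an array of characters. Read on.'
answer = search_template('Strings are defined as an {} of characters.', page_text)
print(answer[0])
```

If the sentence does not occur in the text, the helper returns `None`, so a real quiz solver would want to check the result before indexing into it.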

Different HTTP request methods in requests-html Python

You can send different types of requests using the requests-html library in Python. Different types of requests to the server return different responses. To get information from the server, we use the GET request.

HTTP delete request with the requests-html library in Python

We use the HTTP DELETE request to delete a resource from the server. To make an HTTP DELETE request with the requests-html library in Python, use the session.delete() function.

Example No 10: Making an HTTP delete request in Python with the requests-html library

In the Python code example below, we have used the requests-html library to make an HTTP delete request to [https://httpbin.org/delete].

    from requests_html import HTMLSession

    session = HTMLSession()
    url = 'https://httpbin.org/delete'
    user_to_be_deleted = {
        "user": 'alixaprodev'
    }
    response = session.delete(url, data=user_to_be_deleted)
    print(f'Status Code:{response.status_code}')
    print(f'Request Type : {response.request}')

    ## output ##
    # Status Code:200
    # Request Type : <PreparedRequest [DELETE]>

HTTP get request with parameters using the requests-html library in Python

The HTTP GET request method is used to request a resource from the server. While you are making a GET request, the server does not change its state. This is normally used for retrieving data from a URL. To make a GET request with requests-html in Python, use the session.get() function.
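Parameters of a GET request travel in the URL itself as a query string. The standard-library sketch below shows what that looks like; `build_get_url` is a hypothetical helper for illustration. Because a requests-html session builds on requests, passing the same dictionary as `params=` to `session.get()` should produce an equivalent URL.

```python
from urllib.parse import urlencode


def build_get_url(base_url, params):
    """Append query parameters to a URL the way a GET request carries them."""
    return f'{base_url}?{urlencode(params)}'


url = build_get_url('https://httpbin.org/get', {'user': 'alixaprodev'})
print(url)
```

Values are percent-encoded along the way, so spaces and special characters in a parameter do not break the URL.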

Example No 11: In this example, we will be making a GET request along with a parameter.

    from requests_html import HTMLSession

    session = HTMLSession()

    # url to make a get request to
    url = 'https://httpbin.org/get'
    get_user = {
        "user": 'alixaprodev'
    }

    # making the get request; params= puts the values in the query string
    response = session.get(url, params=get_user)
    print(f'Status Code:{response.status_code}')
    print(f'Request Type : {response.request}')

    ## output ##
    # Status Code:200
    # Request Type : <PreparedRequest [GET]>

HTTP Post request using the requests-html library in Python

The HTTP POST request is used to modify resources on the server. It sends information to the server in the request body, not in the URL. To make a POST request with requests-html in Python, use the session.post() function.

Example No 12: Use the requests-html library in Python to make a POST request.

    from requests_html import HTMLSession

    session = HTMLSession()

    # url to make a post request to
    url = 'https://httpbin.org/post'
    post_user = {
        "user": 'alixaprodev',
        "pass": 'password'
    }

    # making the post request; data= sends the values in the request body
    response = session.post(url, data=post_user)
    print(f'Content of Request:{response.content}')
    print(f'URL : {response.url}')

    ## output ##
    # Content of Request:b'{ \n "form": {\n "pass": "password", \n "user": "alixaprodev"\n }
    # URL : https://httpbin.org/post

Frequently Asked Questions

requests-html is fun when it comes to web scraping. It has made my life easier. The following are some of the questions that people asked on different forums and that I wanted to answer.

What is the difference between beautifulsoup and requests_html?

The Python beautifulsoup library is used for parsing HTML code and grabbing elements from an HTML document, while requests-html is an even more powerful library that can make HTTP requests to the server as well. requests_html combines the features of the beautifulsoup and requests libraries.

What is the difference between the Python requests module and requests_html?

The requests module is used to make different types of HTTP requests to the server, while requests_html is a more specialized version of the requests library, which can help us with HTML parsing and even solve the JavaScript rendering problem.

How do I use Python to scrape a website?

To scrape a website in Python, use the Python requests-html module.

Is web scraping legal?

No, scraping a website is not legal unless the website owner gives you permission to. There are a lot of websites that do not want you to scrape them, while others want you to scrape them. It depends on the website that you are scraping.

Who developed requests_html?

requests-html is a Python library developed by kennethreitz.

Errors and debugging

no module named 'requests_html'

If you are facing this error, it means that you need to install the requests-html library. Use the pip command to install requests-html.
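A quick way to check whether the module is visible to the interpreter you are actually running is sketched below. It relies only on the standard library; `is_installed` is a hypothetical helper name.

```python
import importlib.util


def is_installed(module_name):
    """Return True if Python can locate the named top-level module."""
    return importlib.util.find_spec(module_name) is not None


if is_installed('requests_html'):
    print('requests_html is available')
else:
    print('requests_html is missing; try: python -m pip install requests-html')
```

If the check reports the module as missing right after an install, pip may have installed it for a different Python interpreter, which is why the `python -m pip` form is a safer habit.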


Source: https://www.alixaprodev.com/2022/04/python-requests-html-library.html
